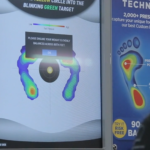

It's in nearly every state nationwide. The Dr. Scholl's interactive kiosk is found in CVS, Walgreens, Walmart, and more. I was brought in to completely redesign the user experience for the new Dr. Scholl's kiosk, which was also adding brand-new technology for the first time. Over 5,000 units would be built on my architectural UI, interactive UX designs, and animated elements.

The main objective of this project was to let a consumer in need of an insole or a knee or ankle brace get a Custom Fit product using our newly invented touchless gesture scanning, which I helped calibrate. I worked closely across design and development for over two years, including user testing studies and updates. The old kiosk was transformed while keeping its basic concept alive.

The UI and UX design I created transformed the user's journey into a clean, streamlined kiosk experience that gathered information for the client, offered sale coupons, recommended a perfect-fit brace or orthopedic insole, and gave more in-depth information about your scan and why the ‘Custom Fit’ product was the best for you. With technology that could scan a user and create a 3D avatar, I overlapped with Development to help maintain a very high level of professional design on every screen.

Dr. Scholl’s is a footwear and foot care brand owned by Bayer in the North and Latin American markets. Founded in 1906, the brand is sold in almost all leading pharmacies in the USA. Today, Dr. Scholl’s products form a complete family of brands that together make a one-stop shop for foot care and footwear.

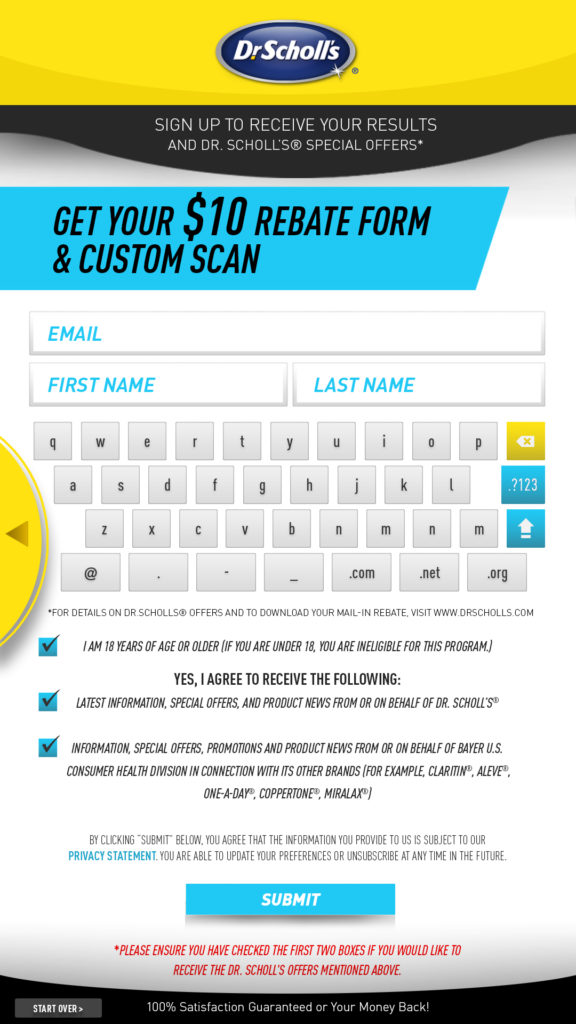

I created the UI/UX and the overall design architecture, and helped Dr. Scholl’s come up with a POC for in-store kiosks that aid customers by giving personalized product recommendations based on their own scientific data, further engaging users in a streamlined brand experience.

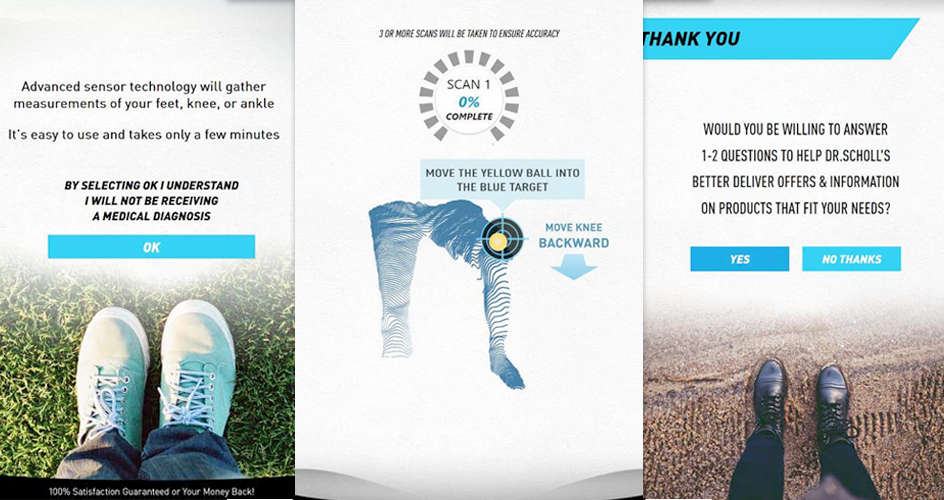

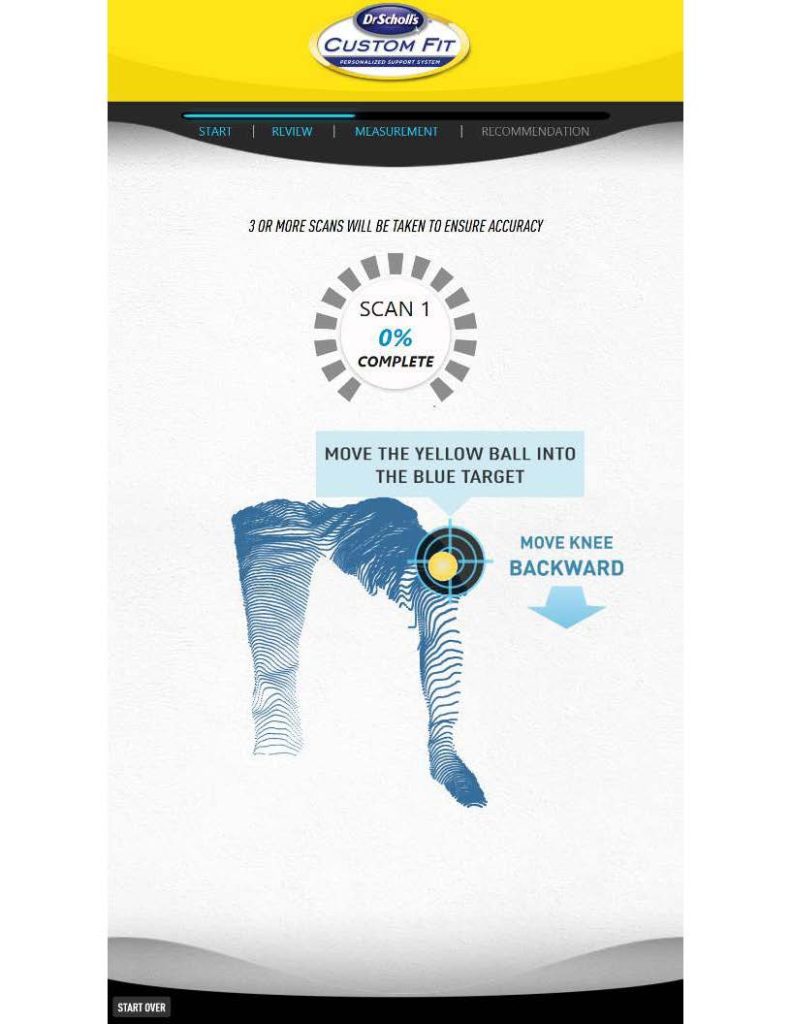

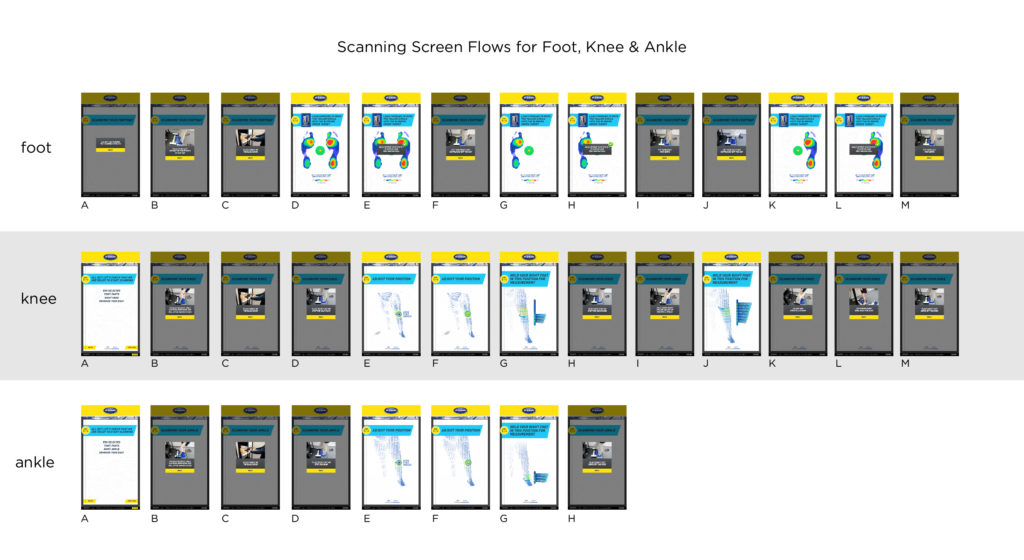

The overall UI was developed to cut down on clicks and text, so a user could get through the experience quickly. I also worked on the technical aspects of scanning a user's leg, ankle, and foot, and directed all 3D modeling and animation videos to ensure consistency and a clear user interface.

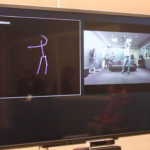

Non-contact human body measurement plays an important role in physical healthcare, and specifically in this kiosk. Current methods for measuring the human body without physical contact usually cannot handle people wearing clothes, which limits their applicability for accurate biometric measurements. The ‘Next Gen Kiosk’ uses a Microsoft Kinect for Windows v2 sensor (a physical device with depth-sensing technology, a built-in color camera, an infrared (IR) emitter, and a microphone array), enabling it to sense the location and movements of people as well as their voices. Microsoft Kinect for Windows v2 can track up to six people within its view as whole skeletons with 25 joints. Skeletons can be tracked whether the user is standing or seated.

The Next Gen Kiosks were designed by my development partner and me, with assistance from our offshore support teams. The Next Gen Kiosk scans the biometrics of a user’s feet, knees, and ankles, and suggests the right custom product to purchase based on the data collected and analyzed.

• Sales of orthotics rose after the introduction of our new Next Gen Kiosk units.

• Customer reviews of the new design and functionality were very positive.

• A comprehensive analytics dashboard was also created to monitor customer usage, completion, and drop-off data, which further helped improve the UI/UX.

In addition to the large motions, the effect of clothing was another challenge for accurate body measurement on the kiosk. Another layer of UI/UX design was integrated to create a visual journey: a live 3D on-screen scan, the processing of the scan, and a final output of data with a product recommendation, with the recommended products shelved on the kiosk itself.

We learned that the Kinect avatar can partly capture the average geometry of clothing, but it cannot accurately measure the parameters of the human body under the clothes. The UX had to be enhanced to guide the user visually through the scan. Kinect also does not necessarily obtain precise segment lengths during motion. In a game this may not be a big deal, but for potential future biomechanical applications it is a problem. To get precise results with the Kinect, a kind of calibration is required: a simultaneous recording of each particular motion with both MMC (markerless motion capture) and MBS (a marker-based system), used to estimate regression equations that then correct the Kinect results.
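The regression-based correction described above can be sketched roughly as follows. This is a minimal illustration, not the kiosk's actual calibration code: it assumes a simple one-variable linear fit between a Kinect-estimated segment length and a reference length, and the names `LinearCorrection` and `fit_correction`, along with the sample numbers, are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class LinearCorrection:
    """Hypothetical correction of the form: corrected = slope * kinect + intercept."""
    slope: float
    intercept: float

    def apply(self, kinect_value: float) -> float:
        # Map a raw Kinect estimate onto the reference system's scale.
        return self.slope * kinect_value + self.intercept


def fit_correction(kinect_values: Sequence[float],
                   reference_values: Sequence[float]) -> LinearCorrection:
    """Ordinary least-squares fit of reference values against Kinect values.

    Both sequences are assumed to come from simultaneous recordings of the
    same motions, one pair of values per recording.
    """
    n = len(kinect_values)
    if n < 2 or n != len(reference_values):
        raise ValueError("need at least two paired measurements")
    mean_x = sum(kinect_values) / n
    mean_y = sum(reference_values) / n
    sxx = sum((x - mean_x) ** 2 for x in kinect_values)
    if sxx == 0:
        raise ValueError("Kinect values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(kinect_values, reference_values))
    slope = sxy / sxx
    return LinearCorrection(slope=slope, intercept=mean_y - slope * mean_x)


# Made-up example data (centimetres), for illustration only.
kinect_lengths = [30.0, 35.0, 40.0, 45.0]
reference_lengths = [35.0, 40.5, 46.0, 51.5]
correction = fit_correction(kinect_lengths, reference_lengths)
corrected = correction.apply(38.0)  # corrected estimate for a new Kinect reading
```

A real calibration would likely fit one such equation per body segment and per motion, and might use more than one predictor; the single linear term here is only the simplest form of the idea.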

We built the kiosk around a Microsoft Kinect to revolutionize how a user interacts with and experiences the new kiosk. Our project was one of the first to use Kinect in ways never imagined. From helping a user with a disability to helping doctors in the hospital room, people are taking Kinect beyond games. The technology was new, and it had never been calibrated to operate in the way we envisioned.

Kinect was originally developed with RGB cameras, infrared projectors and detectors that mapped depth through either structured light or time-of-flight calculations, and a microphone array, along with software and artificial intelligence from Microsoft that allow the device to perform real-time gesture recognition, speech recognition, and body skeletal detection for up to four people, among other capabilities. This enables Kinect to be used as a hands-free natural user interface device for interacting with a computer system: a no-touch gesture UI.

Microsoft now considers non-gaming applications, such as robotics, medicine, and health care, the primary market for Kinect. Our project, among others, led to prototype demos of other possible applications, such as a gesture-based user interface for the operating system similar to the one shown in the film Minority Report.

We later upgraded to Azure Kinect, Microsoft's non-gaming release, which incorporates Microsoft Azure cloud computing applications among the device's functionalities. Part of the Kinect technology was also used within Microsoft's HoloLens project.

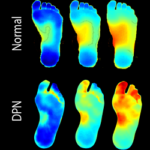

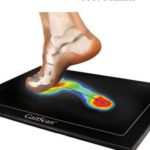

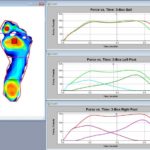

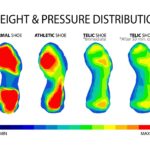

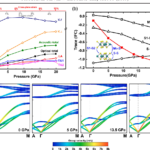

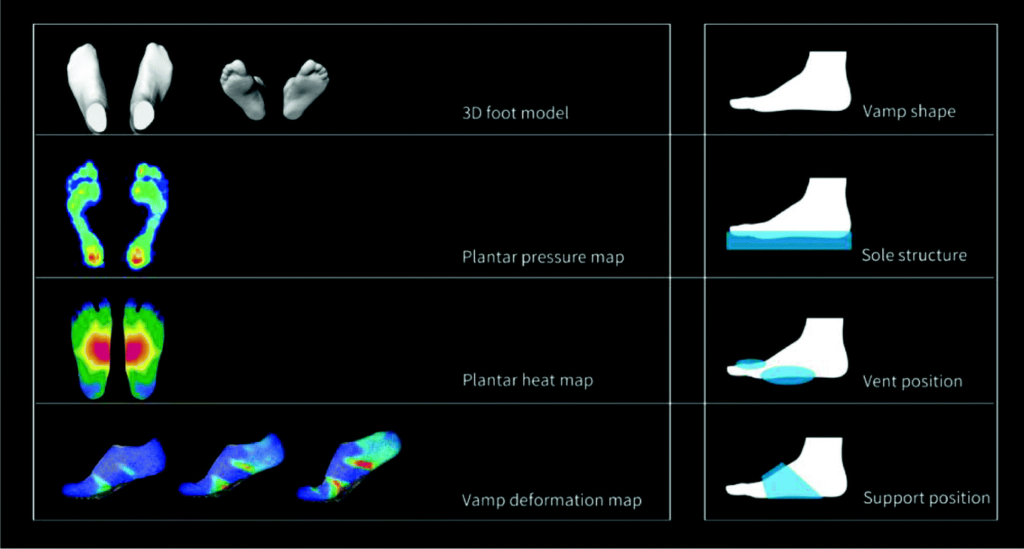

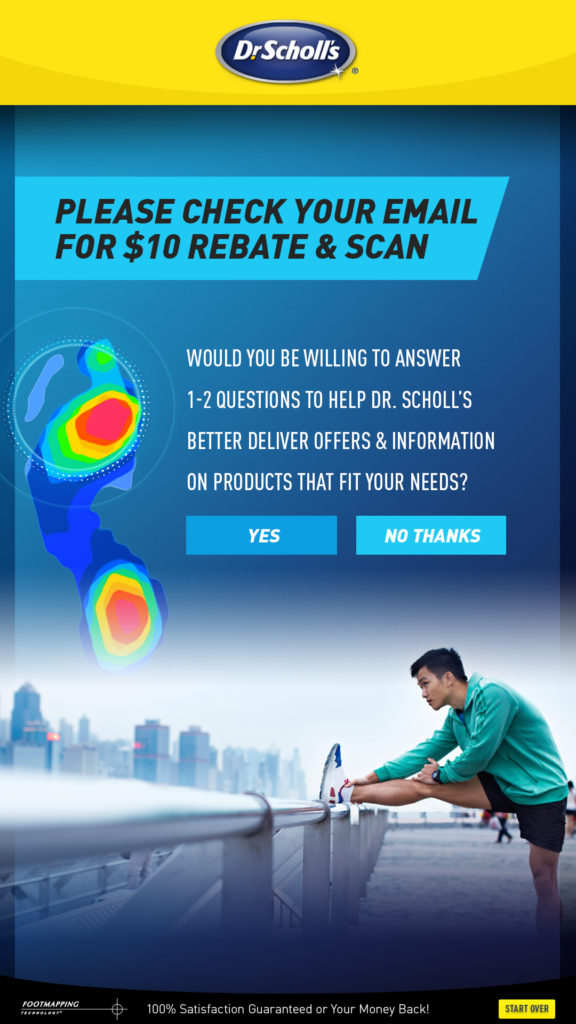

The next piece of technology integrated was a Foot Pressure Mapping System, which uses sensors to measure and analyze pressure distribution on a person's foot for research and product development applications. The system connects to a computer and includes image capture and analysis software, which provides more data on how a person stands and which insole or brace is the best fit for them.

We ran side-by-side developmental tests, which I became more and more involved with, as the scans needed to be brought to life visually for the user. I created a colorized pressure display tied to the calibrated system within the pressure pad and the Microsoft Kinect camera, which processed all the scientific data in real time. Below are some images of what we learned and tested to calibrate a true reading of the user's weight distribution, along with an augmented reality avatar of their body form. Together these allowed a kind of motion gesture analysis that had never been done this way before, and it required my detailed involvement in both graphics and visual alignment.
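To make the colorized pressure idea concrete, here is a small sketch of how pressure readings might be turned into colors and a left/right weight split. It is an assumption-laden illustration rather than the kiosk's implementation: the blue-to-green-to-red ramp, the function names `pressure_to_rgb` and `weight_split`, and the grid layout (rows of sensor readings, left half of the columns under the left foot) are all hypothetical.

```python
from typing import List, Sequence, Tuple


def pressure_to_rgb(value: float, max_value: float) -> Tuple[int, int, int]:
    """Map a pressure reading to a color: blue (low) -> green (mid) -> red (high)."""
    if max_value <= 0:
        raise ValueError("max_value must be positive")
    t = min(max(value / max_value, 0.0), 1.0)  # normalize and clamp to [0, 1]
    if t < 0.5:
        u = t / 0.5  # 0 at blue, 1 at green
        return (0, round(255 * u), round(255 * (1 - u)))
    u = (t - 0.5) / 0.5  # 0 at green, 1 at red
    return (round(255 * u), round(255 * (1 - u)), 0)


def weight_split(grid: Sequence[Sequence[float]]) -> Tuple[float, float]:
    """Return (left %, right %) of total pressure on a pad.

    Assumes every row has the same even number of columns and that the left
    half of the columns sits under the left foot. Returns (0.0, 0.0) when the
    pad reads no pressure at all.
    """
    cols = len(grid[0])
    if cols % 2 != 0 or any(len(row) != cols for row in grid):
        raise ValueError("expected a rectangular grid with an even column count")
    half = cols // 2
    left = sum(sum(row[:half]) for row in grid)
    right = sum(sum(row[half:]) for row in grid)
    total = left + right
    if total == 0:
        return (0.0, 0.0)
    return (100.0 * left / total, 100.0 * right / total)


def colorize(grid: Sequence[Sequence[float]], max_value: float) -> List[List[Tuple[int, int, int]]]:
    """Convert a whole pressure grid into a grid of RGB colors."""
    return [[pressure_to_rgb(v, max_value) for v in row] for row in grid]
```

A three-stop ramp is used here because it keeps low, medium, and high pressure visually distinct at a glance; the actual palette on the kiosk may have differed.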

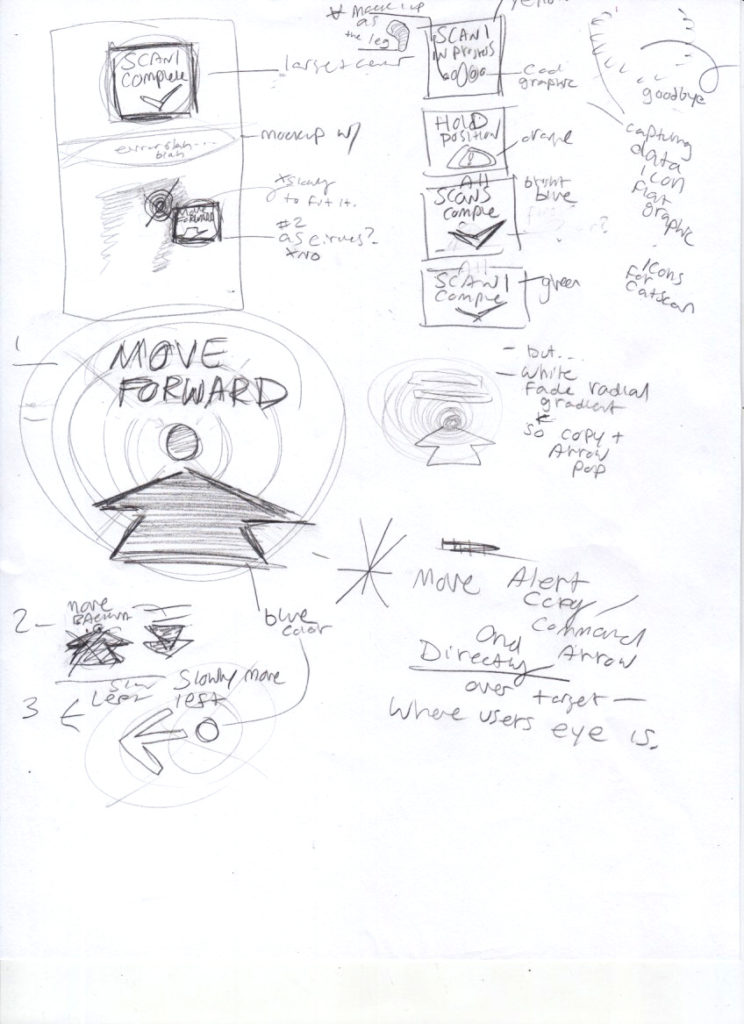

The process began from scratch. A series of meetings gave each branch the chance to offer input and modifications to meet multiple goals. I acted as mediator across the company hierarchy, collaborating and visually explaining how operations would proceed, with many on-the-fly decisions having to be made. After sketching out a few drafts of screens and interactions, I started prototyping and wireframing, fleshing out the high-fidelity mock-ups, and building more screens and more functionality. I lived and breathed the kiosk for two years, ensuring we could make things as smooth and fast as possible. I also oversaw live user testing.

A new way of interacting through gesture motions needed to be clever and clear for all ages. I immediately envisioned a target paired with a 3D ball on the screen, which the machine would recognize as a specific point on the body needed to analyze the data. The goal is to move the ball into the circular target through the user's body movements. For a proper scan, the machine required specific stances and leg movements in order to process the data. I provided the design and user experience along this new frontier of technological kiosks.
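The ball-and-target interaction can be sketched as a simple hit test with a hold timer. This is only an illustrative sketch under assumptions: the ball position is taken to be a tracked body point already mapped to screen coordinates, and the `DwellTarget` class, its field names, and the one-second hold time are hypothetical rather than taken from the kiosk software.

```python
import math
from dataclasses import dataclass


@dataclass
class DwellTarget:
    """A circular on-screen target that completes once the ball is held inside it."""
    center_x: float
    center_y: float
    radius: float
    hold_seconds: float = 1.0  # assumed hold time before the stance counts
    held: float = 0.0          # seconds the ball has stayed inside so far

    def contains(self, ball_x: float, ball_y: float) -> bool:
        # The ball is "in" the target when its center lies within the circle.
        return math.hypot(ball_x - self.center_x, ball_y - self.center_y) <= self.radius

    def update(self, ball_x: float, ball_y: float, dt: float) -> bool:
        """Advance by one frame of dt seconds; return True once the hold is complete."""
        if self.contains(ball_x, ball_y):
            self.held += dt
        else:
            self.held = 0.0  # leaving the circle resets the timer
        return self.held >= self.hold_seconds


# Example: a target at the center of a normalized (0..1) screen.
target = DwellTarget(center_x=0.5, center_y=0.5, radius=0.1)
```

Requiring the ball to stay inside the circle for a short time, rather than just touching it, is one common way to avoid accepting a stance the user only passed through by accident.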

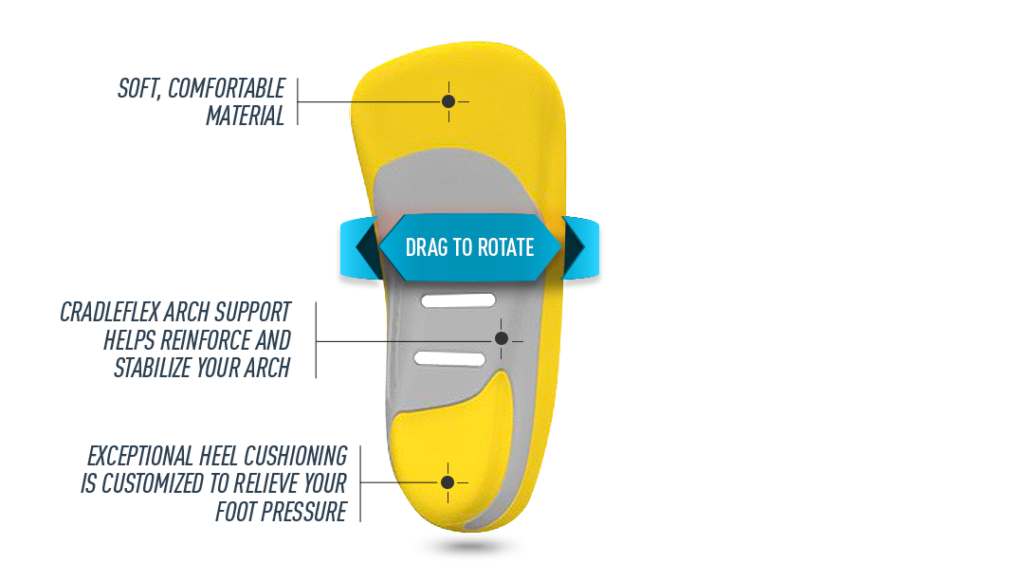

Some of the UI elements: buttons, scanning icons, download bars, directionals, and a 3D insole the user could spin around and view using gestures.

Design Elements

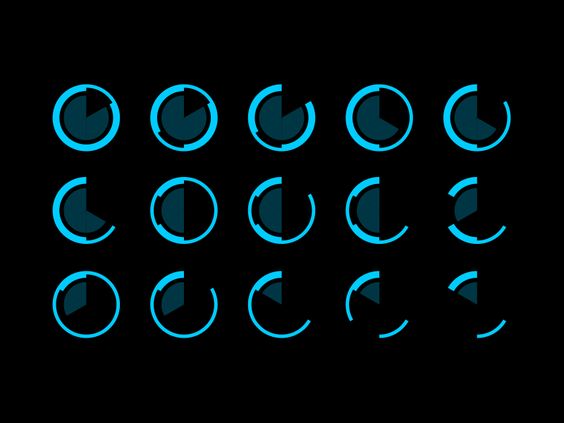

Animating Scanning Elements

Above is a loading icon animation I created, and below is a GIF animation created for the intro video loop known as the ‘Attractor Loop’. It appears in the intro video around the lady’s leg, depicting a three-dimensional scanning process for the user.

Instructional User Videos

Certain parts of the user’s journey required the user to perform specific actions so the pressure pads and Azure Kinect cameras could properly acquire all the data needed for a perfect product recommendation to help lessen the user's pain. Below is an example of one of the many 3D animated videos I art directed, working closely with the animator on the ‘look and feel’.

The new interactive kiosk uses advanced technology to custom fit products for a diverse range of users, accounting for everyone's different heights and weights and the way we each stand. With touchless gesture interaction, the user steps into a new dimension of experience.

The Old & The New

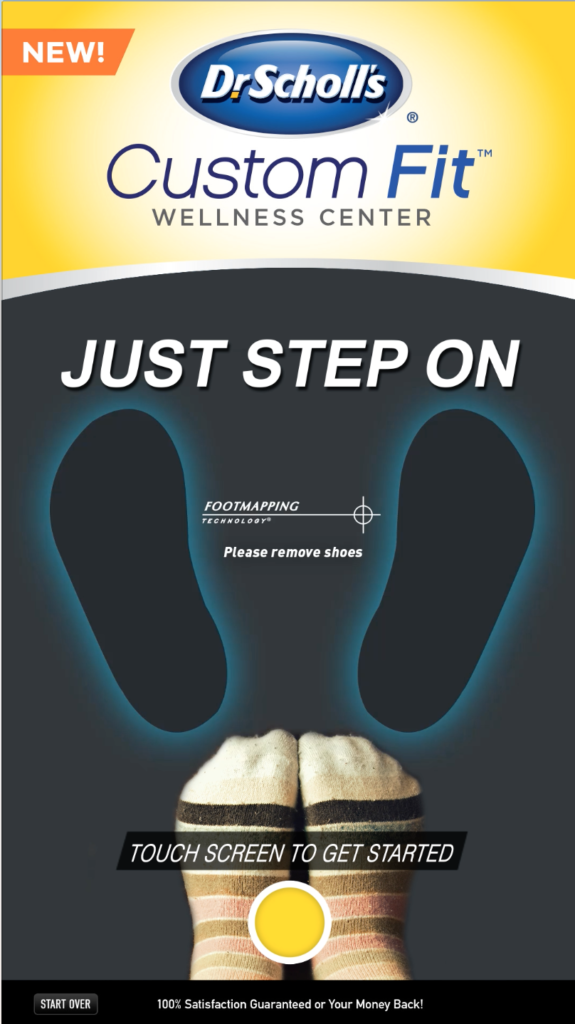

Below is the old kiosk design and the new kiosk with the new UI/UX, which I created from start to completion:

A young woman using the kiosk in a Walmart store, moving quickly through a professionally developed digital user experience.

Finally, I helped the developers create a visual backend that can be wirelessly updated for the seasons. Later, AI technology the client subscribed to was integrated to identify people near the camera, including their emotions. The little kiosk that could was moving into the future through the merger of machine and human interaction. I left the maintenance team a plan for changing graphics based on location and other custom circumstances.